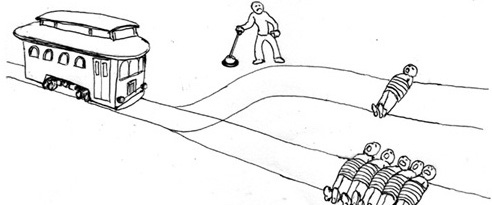

Can a driverless car choose who lives and who dies in an accident?

That’s the question we posed just over 12 months ago about the moral dilemmas facing developers working on autonomous car projects around the world.

You see, sometimes it’s not a choice between life and death, good and bad. Sometimes, it’s a choice between different bad options where some level of injury, damage – or even death – cannot be avoided.

The question is, how much is acceptable?

Driverless cars will have to be programmed to make those kinds of moral distinctions.

But in all the field trials to date, accidents involving driverless vehicles have come from some kind of system failure or a misreading of a traffic situation, not from a choice between one crash scenario and another.

For example, a recent crash – a prang between a driverless car and a lorry – has been put down to “software anomalies”.

So, the question we asked back in the autumn of 2015 has remained an academic one.

But now, there is at least an opportunity to put your moral instincts to a practical test.

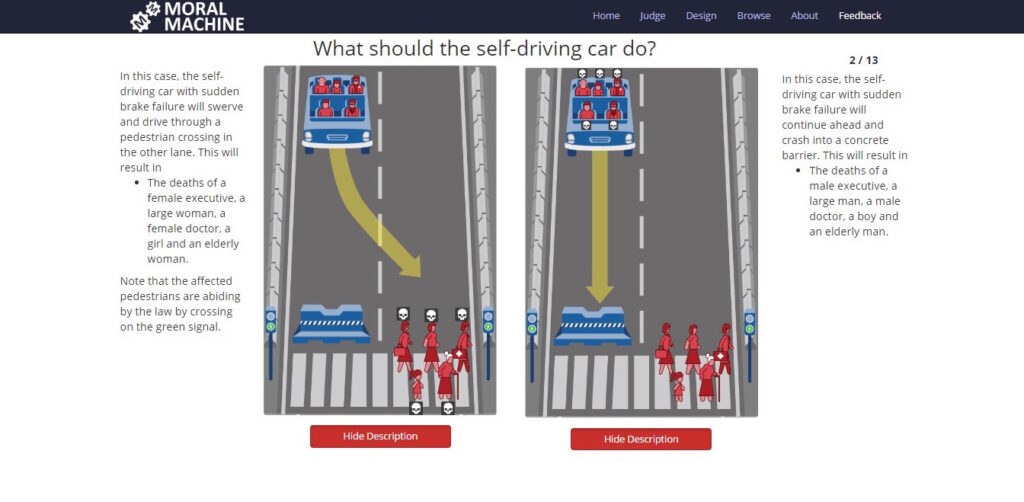

A team at the Massachusetts Institute of Technology (MIT) has created an interactive driverless car game called “Moral Machine” where you can quiz your own ethical values.

Players are presented with 13 scenarios and must choose the “best outcome” from what often appear to be no-win situations.

The driverless car’s brakes have failed, there’s no chance of avoiding an accident and serious or fatal injuries are likely.

Do you choose to save children, women or men? The young or the old? Does it make a difference to your choice if the potential victims are doctors, professionals or athletes? What if the potential victim was pregnant? What if they had a criminal record?

Does it matter if the victims are pedestrians who were crossing the road when the sign said “don’t walk”?

Once you’ve completed the game you can see how your responses and ethical preferences compare to others who have played.

You can also design and add your own scenarios to see how others respond. So far, more than 150,000 people have taken part.

“A member of my laboratory who owns a cat was very adamant on protecting pets at all cost,” Professor Iyad Rahwan of MIT told The Times.

“I guess this reveals that people will have different, even conflicting, values when it comes to machine ethics. This is something that society will have to deal with to strike an acceptable balance.”

These aren’t purely academic questions. Engineers and computer scientists working on driverless car development are grappling with precisely these issues when programming vehicles.

And, as The Guardian reported earlier this year, ethical choices we see as straightforward – for example, reduce casualties as far as possible; sacrifice the few if it saves the many – become cloudy when we put ourselves in the passenger seat.

According to one survey, 76% of drivers believe a passenger in a driverless car should be sacrificed if it saves the lives of 10 pedestrians.

But they also said they would be less likely to buy a driverless vehicle if it was programmed to behave in that way.

So yes, when we look at the big picture, we can make easy ethical decisions.

But when we put ourselves or our friends and family in that picture, the questions suddenly become much harder to answer.

So, take a look at the Moral Machine, take the test and see how your answers compare.

What ethical rules would you set for a driverless car?